What aids and impedes donors using evidence to make their giving more effective? This question motivated two researchers at the University of Birmingham to search widely through the academic and non-academic literature for studies that provide answers. Their findings are in a systematic review published last month. It's remarkable. It finds that we – the human race – don't really know yet what aids and impedes donors using evidence, because nobody has yet investigated properly.

This matters because some interventions run by charities are harmful. Some produce no benefit at all. And even interventions that do succeed vary hugely in how much good they do. So it can be literally vital that donors choose the right ones. Only sound evidence about the effectiveness of interventions can reliably guide donors to them.

The researchers – Caroline Greenhalgh and Paul Montgomery – found only nine documents that fit their inclusion criteria. None of them is by an academic, and none is published in an academic journal: they were all published 'informally' by non-academics, e.g., a report for, and published by, the European Venture Philanthropy Association, and a four-page article in Philanthropy Impact Magazine. So none of those nine documents has undergone peer review, which is a quality-control system, albeit one that has been widely shown to be defective.

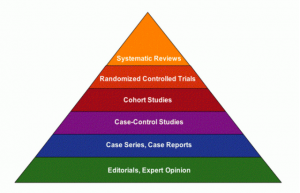

Of those nine documents, only three are empirical studies involving actual donors / funders. The rest are discussion papers, documents plotting how the academic study of philanthropy might evolve, or reports of the musings of umbrella bodies and 'experts' about what influences donors. In the well-established hierarchy of evidence about the effectiveness of interventions (which the systematic review cites), 'expert opinion' is at the bottom – if it is included at all.

The three studies that involve actual donors all simply ask their opinion about their own behaviour – and some ask what would get them to behave differently, e.g., to use more evidence. They are a survey of US donors and two sets of interviews with UK foundations. (To be fair, those three studies may not have had exactly the same research question as the systematic review. Studies can be included in a systematic review if they pertain to its research question, even if they're not a direct run at it.)

Are surveys and interviews a good way to identify what aids and impedes behaviour, and what would get people to behave differently?

Let me tell you a story. Once upon a time, researcher Dean Karlan was investigating microloans to poor people in South Africa, and what encourages people to take them. He sent people flyers with various designs, offering loans at various rates and sizes. It turned out that giving consumers only one choice of loan size, rather than four, increased their take-up of loans as much as if the lender had reduced the interest rate by about 20 percent. And when the flyer featured a picture of a woman, people were willing to pay more for their loan: demand was as high as if the lender had reduced the interest rate by about a third.

Nobody would say in a survey or interview that they would pay more if a flyer has a lady on it. But they do. Similarly, Nobel Laureate Daniel Kahneman reports that, empirically, people are more likely to believe a statement if it is written in red than in green. But nobody would say that in a survey, not least because we don't know it about ourselves.

There are many such examples. This is why commercial marketers do experiments. They run randomised controlled trials (which they often call A/B tests). They run conjoint analysis, in which customers are offered goods with various combinations of characteristics and price – maybe a pink car with a stereo for £1,000, a pink car without a stereo for £800, a blue car with a stereo for £1,100 and a blue car without a stereo for £950 – to identify how much customers value each characteristic. They film customers in shops to observe how long they spend making particular purchase decisions.

They don't just ask customers what they do, or what they would do under certain conditions. We don't know what we each actually do, let alone what we would do in different circumstances. Plus people often have reason to lie to researchers, e.g., to say what they think they ought to say. Interviews and surveys are not good research methods for these questions.

Experiments are possible – and necessary

Back to philanthropy. Given that the only three studies with donors apparently just asked their opinion about what aids and hinders them in using evidence, it seems that we don't know. Finding out would involve doing experiments[1] to observe what donors and funders actually do when presented with information of various types, packaged in various ways.

Such experiments are perfectly possible. For example, consider the related question of what will get policy-makers to use evidence in their decisions. People have run experiments to find out. Gautam Rao at Harvard and others ran an experiment in Brazil with over 2,000 mayors (!). They found that simply informing mayors about research on a simple and effective policy (sending reminder letters to taxpayers) increased the probability that the mayor's municipality implemented the policy by 10 percentage points. They also found that mayors are even willing to pay for information from such research, and will pay more for results from studies with larger samples.

Another study underway elsewhere looks at influencing educational policy. Some of the policy-makers in the study are given a financial incentive to improve educational attainment in their district, and others are not; and some of the policy-makers are given evidence about how to achieve that, packaged in one of two ways, and some of them are not. This clever experiment should distinguish the effect of information from the effect of incentives in getting policy-makers to use evidence.

We must apply these methods to philanthropy

We could perfectly well run experiments like this in philanthropy to find out what gets donors to use more evidence. Take a reasonably large set of donors (those with CAF accounts, for example) and identify those who have previously funded some sector, such as education in low- and middle-income countries. Randomly divide them into several groups: one group gets sent nothing, and the others receive evidence about what works and what doesn't in that sector, presented in various ways. Then compare whether and how the giving behaviours of those groups change.
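To make the mechanics of such a design concrete, here is a minimal Python sketch of its two core steps: randomly assigning donors to a control group or to one of several evidence-presentation groups, and comparing the average change in giving across those groups. Everything in it is a hypothetical illustration: the group names, the function names, and the outcome measure (the share of a donor's giving in the sector that goes to evidence-backed programmes) are assumptions, not part of any existing study or CAF system.

```python
import random
from collections import defaultdict
from statistics import mean

# Hypothetical arms: a control group that is sent nothing,
# plus two (assumed) ways of presenting the evidence.
ARMS = ["control", "evidence_summary", "evidence_full_report"]


def assign_arms(donor_ids, arms, seed=0):
    """Randomly assign each donor to one arm, keeping arm sizes as equal as possible."""
    rng = random.Random(seed)  # seeded so the assignment can be reproduced and audited
    shuffled = list(donor_ids)
    rng.shuffle(shuffled)
    # Deal the shuffled donors out in turn, so arm sizes differ by at most one.
    return {donor: arms[i % len(arms)] for i, donor in enumerate(shuffled)}


def mean_change_by_arm(assignment, before, after):
    """Average before-to-after change in the (hypothetical) outcome for each arm."""
    changes = defaultdict(list)
    for donor, arm in assignment.items():
        changes[arm].append(after[donor] - before[donor])
    return {arm: mean(values) for arm, values in changes.items()}


if __name__ == "__main__":
    # Toy placeholder data only, not real donor records.
    donors = [f"donor_{n}" for n in range(9)]
    assignment = assign_arms(donors, ARMS, seed=42)
    before = {d: 0.5 for d in donors}
    after = {d: 0.5 for d in donors}
    print(mean_change_by_arm(assignment, before, after))
```

Shuffling first and then dealing donors out in turn keeps the groups balanced in size while leaving membership to chance. A real study would also need a pre-specified analysis plan and proper statistical tests, rather than a bare comparison of group averages.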

Of course, we don't need all donors to engage with the evidence themselves: it's fine if they rely on somebody competent to have found and interpreted it for them. If a donor funds organisations funded by, say, Co-Impact, or most of those recommended by GiveWell, they will be giving to organisations that are based on sound evidence (or that are building the evidence base), without having to read the evidence themselves.

But on the question of what aids and hinders donors and funders in using evidence themselves, to fund the best work and avoid harmful work, we don’t know. We could find out. There is work to do.

Caroline Fiennes is the Director of Giving Evidence. Follow her on Twitter at @carolinefiennes.